VET Reform – Our Position

Do we need VET Reform or continuous improvement? The short answer: we need VET Reform. But what we really need is a continuous improvement approach to the VET system.

The first image that comes alive in this fragmented and incomplete VET picture is that the current system effectively promotes a race for compliance rather than quality. And compliance as a goal in itself can be as dangerous as anarchism.

The current regulatory system (not only the legal framework, but the performance of several stakeholders) is, in reality, a process-focused system, not an outcome-focused one as described since the endorsement of AQTF 2007. The regulator’s audits are focused on evidence that shows whether the RTO is following “compliant” procedures, but do not assess how those procedures affect the learning transferred to students, or follow the application of the new skills attained in the workplace.

Here is our first point for VET Reform:

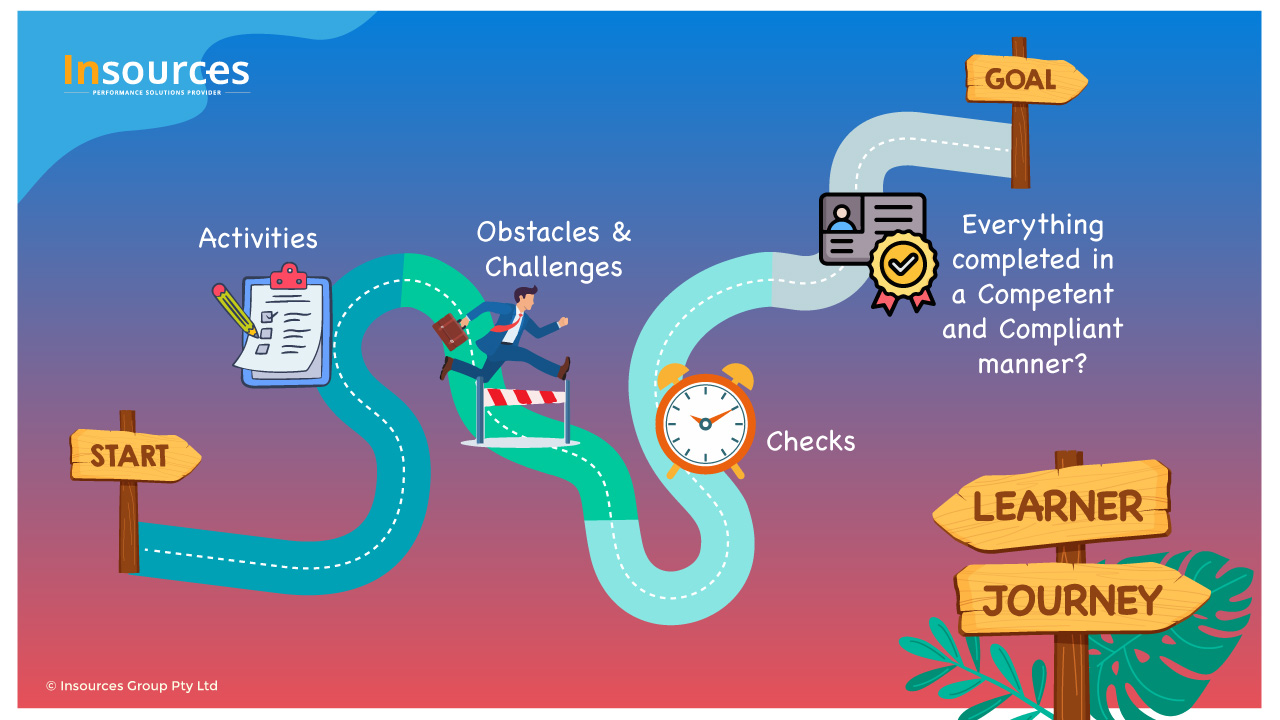

- VET must be an outcome-focused system, where the only variable that is not negotiable is learning the competencies required by the industry and described in the national training packages.

Everything else can be variable and/or flexible; the variables that affect the learning journey (timetable, delivery method, media, etc.) should be adjusted to meet the needs of specific target groups and the industry. There is only one thing that is not negotiable: learning the competencies.

The current regulatory system is unable to identify cases where learning hasn’t occurred, or where the skills learnt do not meet current industry needs. Instead, the system identifies and congratulates those RTOs that generate a considerable amount of evidence of completed procedures, but not necessarily of successful and industry-relevant training.

Why? One of the reasons, not the only one, is the way the standards are written: process-driven, not outcomes-driven. SNR 15.5 states “assessment including RPL meet: meet the requirement… …is conducted in accordance with… …is systematically …”

SNR 15.5 is a mix of best practice and legislation, and as a result it is neither comprehensive enough to qualify as an effective “best practice” document nor specific enough for the auditor to regulate the outcome of the assessment process. The critical point for SNR 15.5 should be: Does the RTO have enough evidence to determine that each person who received a certificate/statement of attainment has achieved the competencies? It should probably also include a set of specific indicators showing what evidence is required to determine quality and quantity, and even a note explaining what evidence is not appropriate for that purpose.

We have seen many cases where the regulator’s auditor focused on the assessment process (matrices, procedures, checklists, forms) but not on assessing the evidence itself, observing students’ work, or interviewing students, or employers in the case of apprenticeships. Why? Because the regulatory approach is process-based and process-driven, not outcomes-driven as it should be.

It is clear that the RTO must have an assessment system and follow the principles of assessment, and that assessment evidence must be valid, sufficient, current, and authentic. Those procedures can and must be audited, but they should not be the only focus of the audit.

The regulator must audit the final product of the training and assessment process: learning the competencies. (Competent individuals that can add value to our industry performing technical tasks, working in teams, using IT tools, solving problems, communicating effectively in English, contributing to an innovative environment, etc.) Until then, the regulator will have limited impact on the quality of training and the regulatory framework will continue to be ineffective. That is definitely not the return that all stakeholders expect for the cost we pay for regulation.

A lot has been said about over-regulation. What is over-regulation? It can be seen as the use of rules that don’t provide any benefit to the outcome that is regulated. For our industry the only outcome that matters is learning the competencies.

Many areas of our current standards, or ASQA’s interpretations of current standards, add administrative cost to RTOs but no value to the final outcome whatsoever. Those measures are promoting a divorce between quality and compliance and are inducing a negative approach to compliance.

At the beginning of this presentation, we mentioned the half of the picture that we know; there is another half that we don’t know. Vocational training is meant to produce changes in the workplace and have an impact on business measures. How much do we know about those outcomes? How much have we measured? We see continuous updates in units of competency and qualifications, but where is the data about implementing the units of competency and qualifications to support those changes? How was the workplace affected? What changed? Improved productivity? Reduced accidents? Skills shortages addressed? Reduced absenteeism? Reduced processing times? Where is that data?

For example, how did TAE40110 affect our industry? Has it reduced compliance issues? Has it increased students’ completion rates? Has it improved students’ satisfaction? Has it contributed to creating better learning environments? Has it reduced training design costs for RTOs? Where is that data?

How can our industry continue making changes in training packages, and updating versions without that data? Are we flying blind? I invite you to check the features of versions 3 and 4 of the TAE10 training package and answer the question: What is the value of the changes?

If we are flying blind we are more susceptible to becoming confused in this process-driven regulatory web.

That is our second recommendation for VET Reform:

- The Strategic Plan of each industry must be supported by data that shows the impact of implementing training.

If we don’t have data about how VET is affecting the industry, including clear measures, we don’t have a case for training and we risk running in circles wasting a tremendous opportunity to support the Australian economy.

Industry Skills Councils should be accountable for this process, and must spend time and effort collecting, analysing, and communicating the data. We do recognise the effort and resources allocated to the “environmental scans” produced by ISCs. But what information is included in those reports? Input data (number of enrolments, cost of enrolments, number of visas to overseas students, number of employees, number of RTOs, etc.), general forecasts about future trends, but not data that can be used to plan effective training.

The continuous improvement plan for those training packages has not been implemented at the highest levels of evaluation: application of learning, impact of learning in the workplace, and return on investment. Instead, significant amounts of resources and time have been allocated, and strategic pathways designed, based on input data, reaction levels, and completion rates.

Do we have a national consensus about what we want to create in the workplace? Have we planned how we are going to measure the results? Have we measured those results so far? How do we know we are on the right path?

For example, the latest environmental scan talks about LLN issues, and I wonder what impact the TAELLN401A and/or TAELLN411 units have had. What has improved? Which measures have been used? Are people with basic levels of language, literacy, and numeracy skills now being supported by RTOs? How can we measure that? In the last few years, some qualifications have been created at AQF levels 7 and 8, but what effect have they had? We changed from TAA to TAE; what difference has that made? I can’t find answers to those questions and again think we are flying blind.

Another consequence is the cost of implementing these programs. The change from TAA to TAE required a significant investment, and I wonder what the return on that investment was. According to the latest estimates, there are around 200,000 individuals working as trainers/assessors in our industry. How much did the industry invest in the TAA to TAE update? What was the benefit for the industry? The update to the LLN unit is another significant investment; again, what are we getting out of that costly exercise?

This takes us to our third recommendation for this VET Reform:

- Require a return on investment study for public funding.

The credibility of the system is at stake when money is poured into a nationally recognised training program and there are no noticeable changes.

We should calculate the return on investment and analyse how implementing nationally recognised training has affected key business areas such as improving safety, improving sales, decreasing unemployment, etc. The report can also include intangibles such as improving social inclusion, promoting further studies, improving employee satisfaction, etc.
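As a minimal sketch of that calculation, assuming the conventional definition of return on investment (this statement does not prescribe a particular formula), the figure could be expressed as net benefits over costs. The terms "benefits" (the monetary value attributed to the training) and "costs" (the full cost of the program) are illustrative labels, and the numbers in the second line are purely hypothetical.

```latex
\[
\text{ROI}\,(\%) = \frac{\text{benefits} - \text{costs}}{\text{costs}} \times 100
\]
% Hypothetical illustration only: benefits of \$150{,}000 against costs of \$100{,}000
\[
\frac{150{,}000 - 100{,}000}{100{,}000} \times 100 = 50\%
\]
```

Intangibles such as social inclusion would sit outside this figure and be reported alongside it.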

Without those studies, how can we justify the investment? How do we know that we are putting taxpayers’ money into the right program/RTO? The government, as the major client for the VET system (it buys around 75% of all enrolments), must set the strategy for investment in VET based on relevant data and not on assumptions. Pouring money (public funding) into the system without monitoring outcomes will continue to distort the industry, allowing unethical and unfair competition among training providers.

We propose including in the Standards for developing training packages a requirement for a preliminary evaluation of the program, and for a clear plan for return on investment evaluation that must be completed before any further update is endorsed/approved.

We also propose including an evaluation plan that must be completed at a minimum of Level 3 (Application of Skills in the Workplace) for any enrolment funded by the government.

RTOs are always going to wear the blame. This takes us to our next point for the reform:

- A regulatory approach that does not build on previous experience. No continuous improvement.

Since AQTF 2005 was introduced, it seems that the standards for the delivery and assessment of nationally recognised training have been going in circles. But the perception has been the same – We must raise the bar for the RTOs.

It is time for all VET stakeholders to be accountable for the sector outcomes. Our system rests on three basic pillars:

- Regulatory framework (policy makers and regulators)

- Training packages (Industry Skills Councils), and

- RTOs.

In the past, we have dealt with ineffective regulations, ineffective auditing processes, content that does not meet industry needs, and processes that do not produce industry-relevant training outcomes. What is our approach to continuous improvement here? By definition, continuous improvement for our system means changes that are a consequence of collecting, analysing and acting on data from stakeholders. Yet the changes in the regulatory framework, the standards for RTOs, Training Packages, the AQF, the Core Skills Framework, the Workplace Core Skills, and the National elearning Strategy have all been initiatives based on isolated, reactive plans that don’t benefit from previous experience and don’t work as part of the same system.

We need a system that works on continuous improvement and has a proactive approach to achieve nationally agreed goals. We need better and more consultation with all stakeholders. Typically, the consultation is opened when a new change is imminent. We need continuous and effective consultation. We need to have open forums where VET stakeholders share ideas and challenges and there is a continuous process to feed this information back into the system.

We need a government that supports the continuous improvement process by providing the platform required to collect, analyse and record historical data from national consultation.

We also need a more transparent system where RTOs are not only there to be regulated, but they have the opportunity to truly contribute to the continuous improvement process.

We propose the creation of the National VET Platform (or however you want to name it) as a permanent space, administered by the government, that monitors how the regulatory framework has affected all stakeholders and continuously collects feedback from them.

Finally, the principles of adult learning must be effectively embedded into the regulatory framework, and their application must be continuously monitored and improved.

As professional educators, we understand and apply principles of adult education that support the work of trainers, facilitators, and human capital development professionals worldwide. To guarantee the integrity of the system, we must ensure the principles of communication are met along with the application of course content, instructional design, delivery methods, assessment practices, and training evaluation.

We propose to include in the new Standards 2014 the principles that underpin the integrity of the system such as: the process to interpret training packages, training needs analysis (for apprenticeships and traineeships), the instructional design process and the training evaluation process.

In summary, we believe VET Reform should concentrate on the following issues:

- Promote an outcome-focused regulatory system, where the only variable that is not negotiable is learning the competencies agreed in the training packages and required by the industry

- Ensure principles of adult learning and human capital development are effectively embedded into the regulatory framework

- Make Industry Skills Councils accountable for establishing, implementing, and monitoring relevant training solutions for the industry, and for continuously reporting on the effects of those solutions on all stakeholders

- Require a return on investment study for public funding, and

- Create a continuous improvement strategy for the VET sector.

By Javier Amaro, Director of Insources Education Pty Ltd and President of the Australian Society of Training and Development Inc (ASTDI)